Labbing Virtualization

I wanted to start this series of articles to explain how Labbing (for me) has evolved a bit over the past few years. I’m also going to publish some other articles covering some more “advanced features” of EVE-NG, like getting wireless onto a Windows host, bridging physical equipment into your EVE-NG Topology, registering a Cisco WAP onto a vWLC, registering a physical phone into a Virtual Call Manager Instance, and some basic ISE setups. I’m helping mentor a couple of people studying for the Enterprise Infrastructure track (formerly the Routing and Switching track), and these topics and questions keep popping up for me: “Hey, how did you get a Windows 10 Node in EVE to associate with a physical AP, and tie that AP back to a virtual Wireless Controller that’s running as a node inside of EVE?” Since I know a few things and have gotten this stuff working in the past, I figured I would share with the rest of the class…

Note: This specific article is aimed at explaining what Server Virtualization is and how I currently have EVE-NG set up. In the next couple of articles we'll dive into EVE-NG's setup and passing through devices.

So… “Back in my day”… We (I) had 48U Server racks, an eBay account and a very strong relationship with Con-Ed (power company). No, seriously… I had a 48U rack in the basement (BX rack pictured below) where I used to cook up labs, yell at all hours of the day and night, do a bunch of config testing and verification, and cause a few spanning-tree and/or routing loops.

But rejoice, people of today! No longer are you stuck with just the physical option for labbing, and you don’t have to size your living situation to fit a rack for keeping you company (or warming you up). Nowadays virtual labbing platforms like EVE-NG have stepped onto the scene to help alleviate the “relationship” with your power company. They also help you avoid the shipping disaster that most of us in the Northeast have come to expect around the holiday season (or just in general), all while letting you lab out concepts and topics you may be studying or are just curious about. I find, for myself at least, that there is a happy medium between having all physical gear and having just a virtual lab.

So, before we jump into the “advanced setups and features” for passing stuff through to the EVE Nodes… Let’s take a look at what my current labbing host setup has evolved into. Hopefully you have a similar setup, are building one, are just curious what labbing virtualization is, or can fill in some of the gaps by Reverse Engineering what we will be going over… (OK, enough plugging of my own site…)

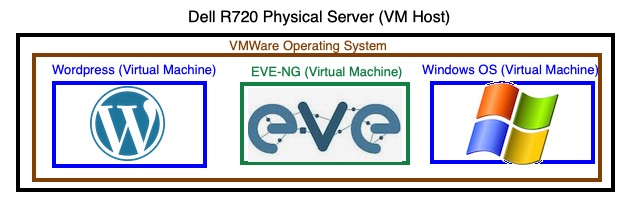

So for the server hardware in my current setup… I have a single Dell R720 rack-mount server that’s doing quite a bit of work on my behalf. (Spoiler alert: You're reading this post on it.) It has multiple VMs running on it, so I sized it accordingly. The server runs VMware ESXi as its OS, directly on the bare metal of the box. One of the VMs deployed within VMware is EVE-NG. This is where I have successfully been able to pass through multiple physical devices and peripherals that are plugged directly into the server to Nodes in EVE-NG (by googling, bothering my brother for the other half of my brain, or just brute force or luck). If your head is spinning, it’s fine. Let’s peel this back a little more.

So what is server/hardware virtualization? And why do we care? Well, we start with a physical server. This is something you can walk up to and touch with your hands. Pretty straightforward, right? In my case, my Physical Server is a Dell R720 Server. The server has the makings/configuration of an arguably small “hyper-converged” setup, depending on who you ask. The server itself has the following specs: two 12-core CPUs (giving me about 48 threads for VMs), 384 GB of RAM, about 80 TB of raw direct-attached storage, and for networking both 10Gb and 1Gb adapters. This server runs around 20 VMs concurrently, hence the hefty resources above. One of the Virtual Machines running on my Physical Server is EVE-NG. Currently, I am running EVE-NG Pro. However, full disclosure: I did successfully pass through the peripherals we will be discussing in later articles using EVE-NG Community Edition.

So now that we have the physical layer defined (the server you can touch with your hands), let’s briefly unpack what VMware is and does. Another disclaimer… I am not a “VMware guy”. I learned VMware on the job and I’m fairly certain there are many other people who are much better at it than I am. So I will explain VMware as best I can in terms of where it fits into the labbing equation for me.

So, every computer/server/device is running an Operating System (OS) of some sort. The purpose of an OS is to process and interact with the inputs and outputs of the peripherals, devices and user(s). VMware allows you to share all of the resources available to the physical server (aka the VM Host/Dell Server) between multiple “guest operating systems”, also known as Virtual Machines (VMs). OK, let’s take a breather here… So we have my Dell Server, aka my VM Host. Inside of my VM Host, I have hardware such as Memory, CPUs, Storage, Network Cards, PCIe Devices, USB controllers, etc. that are seen as resources by VMware. By leveraging VMware as the Host’s OS, I can instruct VMware to divvy up the resources that the VM Host has to offer, as needed, to any Guest OS (Virtual Machine) that is running on it. I promise, it’ll get easier to follow shortly. Hopefully… I’m a fan of pictures so here goes nothing…

So, the black colored rectangle is the physical server (VM Host) that you can walk up to and touch. The brownish colored rectangle represents VMware, which is the OS running on the VM Host (physical server). The blue and green boxes represent the VMs (Guest OSes) running inside of VMware as independent machines. These VMs (blue and green) are all given resources by VMware (brown) from the available resources present in the Physical Server (black). Hopefully that makes a little more sense to you now. Feel free to scratch your head at this point or re-read this section a couple of times and refer to the diagram above.
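If code reads easier than colored boxes, here is a minimal Python sketch of the same idea: a host with a fixed pool of resources handing slices of it to VMs. The `Host` and `VM` classes, the "blue" and "green" VM sizes, and the strict "no overcommit" rule are all my own simplifications for illustration. A real hypervisor like ESXi schedules resources dynamically and can overcommit CPU and memory, so treat this as a mental model and not as how VMware works under the hood.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    """A guest: just a name and the resources it asks for."""
    name: str
    vcpus: int
    ram_gb: int


@dataclass
class Host:
    """Hypothetical model of a physical server whose resources are shared out to VMs."""
    threads: int
    ram_gb: int
    vms: list = field(default_factory=list)

    def free_threads(self) -> int:
        return self.threads - sum(vm.vcpus for vm in self.vms)

    def free_ram_gb(self) -> int:
        return self.ram_gb - sum(vm.ram_gb for vm in self.vms)

    def deploy(self, vm: VM) -> bool:
        """Place the VM only if it fits in what is left (no overcommit, by assumption)."""
        if vm.vcpus > self.free_threads() or vm.ram_gb > self.free_ram_gb():
            return False
        self.vms.append(vm)
        return True


# Host figures from the specs above; the VM sizes are made up for the example.
host = Host(threads=48, ram_gb=384)
ok_blue = host.deploy(VM("blue", vcpus=4, ram_gb=16))
ok_green = host.deploy(VM("green", vcpus=8, ram_gb=32))
too_big = host.deploy(VM("oversized", vcpus=64, ram_gb=16))  # asks for more than the host has

print(ok_blue, ok_green, too_big)
print(host.free_threads(), host.free_ram_gb())
```

The "oversized" VM is rejected because it asks for more threads than the host has left, which is the same sizing question you end up answering by hand when you plan your own host.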

Now that we have our key components (the VM Host, which is the Dell server; the VM Host’s Operating System, which is VMware; and the Virtual Machines), let’s kick it up a bit to what EVE-NG actually is with regard to Server Virtualization. Put bluntly, EVE-NG is a virtualization platform (just like VMware is in my example above). EVE-NG can run multiple nodes/images concurrently within itself with the resources it has available. So as you can probably imagine, EVE-NG is one of the larger VMs running on my host. The sizing of the EVE-NG VM for you will depend on the workload you are trying to accomplish, as well as the available resources (CPU, Memory, Storage, Network(s)) that you have inside of your Host. Sidenote: with EVE-NG Pro, you need to set your CPU settings properly, as your licensing is tied to your deployment. For more information, check their cookbook here.

If you haven’t guessed by now, I do A LOT of full scale labbing, so my actual EVE-NG VM is set up with the following resources: (again, you may have to tinker with yours to get it just right)

- 12 CPUs (1 Core per Socket with 12 Sockets)

- 96 Gigs of RAM

- A 50GB OS Partition

- 1 TB of additional space for labs, images and what-have-you
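To put those numbers in context, here is a quick back-of-the-napkin calculation in Python showing how big a slice of the host the EVE-NG VM takes. The host and VM figures come straight from this article. The two-threads-per-core value is my assumption (it is what lines up with the "about 48 threads" figure), so adjust it to match your own hardware.

```python
# Host figures from the article: two 12-core CPUs, 384 GB of RAM.
SOCKETS = 2
CORES_PER_SOCKET = 12
THREADS_PER_CORE = 2  # assumption: two hardware threads per core
HOST_RAM_GB = 384

# EVE-NG VM figures from the list above.
EVE_VCPUS = 12
EVE_RAM_GB = 96

host_threads = SOCKETS * CORES_PER_SOCKET * THREADS_PER_CORE
cpu_share = EVE_VCPUS / host_threads
ram_share = EVE_RAM_GB / HOST_RAM_GB

print(f"Host logical threads: {host_threads}")   # 48
print(f"EVE-NG vCPU share: {cpu_share:.0%}")     # 25%
print(f"EVE-NG RAM share: {ram_share:.0%}")      # 25%
```

So in my case EVE-NG gets roughly a quarter of the host's threads and RAM, which leaves room for the other VMs. Swap in your own numbers to sanity check your sizing before you deploy.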

So just to set us up for Part 2 of this post, let’s recap what we have here. We have a single VM Host (Dell Server) running VMware ESXi as its OS (Operating System), with a bunch of resources available for the Virtual Machines (VMs) running inside of VMware to utilize. Part 2 of this series will dive into getting you to wrap your head around nested Hypervisors.

Hopefully this helps you understand what Server Virtualization is from a 1,000 ft view, and sheds some light on how I have set up my Virtual labbing setup and how you can look at building your own.

Stark out.
