1 trap – gnireenignE esreveR

For those of you who looked at the title of this article and thought that I have finally lost what was left of my mind… you may be right. However, assuming you’re still reading, you may be curious whether the title was produced by misfiring neurons in my brain or whether there’s something else in play here.

So let’s dive in and see what this article’s title actually means. “1 trap – gnireenignE esreveR”, or “Reverse Engineering – Part 1” (see what I did there?), is an article about the one skill that many of us engineering folks are after: how to “fill in” what was omitted from the “all inclusive documentation” you were handed, or how to soothe the inquisitive mind that keeps wondering “how the heck is this thing REALLY working?” So what is “Reverse Engineering” and, more importantly, “why do I care?” Well, I’m glad you asked. To me, Reverse Engineering is the process of looking at how something operates normally and then walking the process backwards (typically back down the OSI model, or backwards across the network/firewalls/NATs/hops/etc.) until you reach either the point of inception or the broken section of the process. Being able to reverse engineer a problem, or something that has already been implemented, will also sharpen your troubleshooting skills, not to mention it instantly adds to your street cred. Ok, so maybe it won’t do that… Lol.

I’ve been known to approach many issues this way: “backwards”. I think this is because most of us live in a brownfield deployment world, as opposed to a greenfield one. Just to catch our not-so-tech-savvy peeps up: a brownfield environment is a previously deployed infrastructure/network. Typically, the place where you work now was already set up before you were hired. Another example is growing your network through a corporate merger. And who can forget the ever-present “migration” between two technologies, two or more vendors, or anything else that already exists on an infrastructure you want to improve.

However, you do have an advantage when reverse engineering in a brownfield environment: typically (unless you’re called to tshoot a new issue on day 1 or are on a specific contract job) you get to see the network in its “normal operating state”. Documentation helps, but how something is supposed to travel or work and how it actually arrives at its destination are almost always different. With sufficient access to a device’s CLI, you can also confirm the paths at layers 1-3. At most of my positions in the networking industry, I have been known to produce detailed network diagrams that can cover a large wall, without leaving my seat. Understanding which switchports have multiple MACs on them, which IP is tied to which firewall, its MAC address, and whether a port is an access, trunk or routed port can prove quite helpful when you’re trying to diagram a datacenter where all of the cables are a single color. CDP of course is very good (I know everyone just exhaled and said “ugh… Cisco”); if it’s not available, check to see if you have LLDP enabled. If neither is available, start checking for multi-macs (multiple MACs) per switchport, as that can help as well. Perhaps it will make you say “hey, why are there 20 MACs/hosts behind this port?” You can work your way back up the OSI model, saying ok, MAC addresses A, B and C are in VLAN X; let’s ARP them and see what they are. Time consuming? You bet. Worth it? One hundred and fifty percent…
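
To make the multi-mac idea a little more concrete, here is a minimal Python sketch that groups learned MAC addresses by switchport and flags the ports with more than one. The sample table, the port names and the `threshold` parameter are all made up for illustration, and the regex assumes a "VLAN, MAC, type, port" column layout; real output differs by vendor and software version, so treat it as a starting point rather than a parser for any particular switch.

```python
import re
from collections import defaultdict

# Hypothetical sample in the general shape of a switch MAC address table.
# Column order and port naming are assumptions; adjust for your platform.
SAMPLE_TABLE = """\
  10    0011.2233.4401    DYNAMIC     Gi1/0/1
  10    0011.2233.4402    DYNAMIC     Gi1/0/2
  20    0011.2233.4403    DYNAMIC     Gi1/0/2
  20    0011.2233.4404    DYNAMIC     Gi1/0/2
  30    0011.2233.4405    DYNAMIC     Gi1/0/24
"""

# One row: VLAN number, dotted MAC, entry type, port name.
ROW = re.compile(
    r"^\s*(?P<vlan>\d+)\s+"
    r"(?P<mac>[0-9a-f]{4}\.[0-9a-f]{4}\.[0-9a-f]{4})\s+"
    r"\S+\s+(?P<port>\S+)\s*$",
    re.IGNORECASE,
)


def macs_per_port(table_text):
    """Group (vlan, mac) pairs by the port they were learned on."""
    ports = defaultdict(set)
    for line in table_text.splitlines():
        match = ROW.match(line)
        if match:  # silently skip headers and anything that is not a row
            entry = (int(match.group("vlan")), match.group("mac").lower())
            ports[match.group("port")].add(entry)
    return ports


def multi_mac_ports(table_text, threshold=2):
    """Return ports that have at least `threshold` MACs behind them."""
    return {
        port: sorted(entries)
        for port, entries in macs_per_port(table_text).items()
        if len(entries) >= threshold
    }


if __name__ == "__main__":
    for port, entries in sorted(multi_mac_ports(SAMPLE_TABLE).items()):
        print(f"{port}: {len(entries)} MACs")
        for vlan, mac in entries:
            print(f"  vlan {vlan}  {mac}")
```

A port that shows up here is a hint, not a verdict: it could be a downstream switch, an access point, a hypervisor or a phone with a PC behind it, which is exactly the kind of thing worth drawing on the diagram.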

I think this is something much better shown than told; then you can think about how to apply these principles to an issue you’re facing, something that you just aren’t comfortable with, or even a new deployment. Here are a couple of examples of troubleshooting that drove me a little nuts. More to come as I get some time to write them out.

Example one: “Hot Pockets!” I mean “Hot Packets!”

I can still remember the call: “Pete, can you head up to CAMPUS-X and troubleshoot the Vendor-Q Wireless deployment you put in? Everyone is saying it’s slow all the time.” So I drove up, hopped on the wifi and did some quick pings to my gateway, to my exit point (the transit network before the NAT or Internet) and to my DNS servers. See, that kinda stuff, to me, is reverse engineering. Since all of the laptops were in use when I got to the school, I kind of had to poke the network a bit. Having a solid foundation of “what is supposed to happen”, or even a good idea of “Ok, all of the WAPs are new, and all of the ones in this building terminate to closet X”, led me to tell myself “Ok, let’s check the physical path out”. Hop on the IDF switch: no errors on the ports going to the WAPs, on the uplinks from the IDF switch(es) to the MDF, or on the controller’s uplink. So the infrastructure cabling (layer 1) is pretty much out as the issue. Next, am I extending these VLANs anywhere else? What are my ARP timers for this VLAN? Ok, those checked out. My laptop was showing zero signs of issues. So I continued to test and verify what was left of my sanity. One of the wired workstations in a computer lab in that building opened up, so I logged on and started testing application performance. It all seemed to check out. As I signed out of the computer, the onsite tech came over and said “oh good, you’re here. It’s happening again”. We double timed it to the classroom, and when we got there the machine was recovering. It was “working better”. Now bear in mind, this wasn’t a university; I think it took us 3, maybe 4, minutes to get from the library over to the classroom wing.

Three other people were also convinced I was wearing an Access Point because “as soon as you came in, it started working”. So then I started doubting my original metrics. I hopped onto one of the laptops on the cart and started benchmarking. The results were within 1ms of my original latency tests. The Wireless Controller wasn’t very forthcoming with any syslogs or SNMP traps saying “hey I’m broken, please do this”. So I started watching some YouTube videos and opening a few more tabs in Internet Explorer (yes, this was before 2010) to stress the WiFi connection a little. Everything seemed ok. And then suddenly, it was like someone turned the wifi off. My laptop dropped the connection. All of the SSIDs had disappeared. I did a quick survey of the room I was in, and 75% of the laptops had also dropped off the map, so to speak. I plugged my laptop back into the network and logged back into the IDF switch. All the WAPs were up, the controller was up. According to my original tests, my layer 1 pathing was sound… Or was it?

Sometimes you’re so convinced that Vendor X hates you, or that someone is intentionally DoS-ing the network, but that usually is not the case. I needed a mental break from the troubleshooting sessions, so I sat in the faculty lounge and had a soda. A couple of the faculty members recognized me and we talked a bit about the WiFi issues. Someone opened the fridge and heated up their lunch, the next person did the same thing about 2 minutes later, and this pattern continued. I think the foul smell of someone’s overcooked, re-heated lunch is what finally hit me. Something clicked in my head; I looked at the time on the laptop and it was 12:40pm. I said out loud “wait, these laptops are wireless.” Two of the faculty members looked over at me like “and the earth revolves around the sun. Good job there, Einstein…” I had realized that all of the “path vetting” I was doing assumed that the laptops were wired. For example, I was checking from the access points to the controller, then to the gateway and then the internet… Not from the laptop to the Access Point.

As I was thinking about this and writing it down on my notepad, I heard “beep, beep, beep”. And someone got up and popped the microwave open. Then I said “you gotta be s****ing me”. They thought I was talking about their horrendous re-heated lunch and gave me the stank eye. I hopped onto the wireless controller and noticed that a cluster of the WAPs were all on Channel 11. I pulled up the mapping tool I had used for the deployment over the summer (you know, when the schools are empty), located the WAPs, and changed their channels to 1 and 6.

Then I just hung out in the hallway between the four classrooms that were having the issue. And just like that, the issue was gone. Something as seemingly insignificant as re-heating lunch in the faculty room (where there were 2 or 3 microwaves) can wreak havoc on your design, troubleshooting and user experience. Moral of the story… Don’t allow anyone to use microwaves. No, not really. When you’re planning for wireless, make note of the building materials and any appliances. Also, be aware of the path. Wired and wireless are two different beasts.
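
For anyone who wants the channel math behind that fix, here is a small Python sketch of the 2.4 GHz channel plan. It assumes channels 1-13 with centers at 2407 + 5 × channel MHz and the classic 22 MHz channel width, which is why 1, 6 and 11 are the usual non-overlapping trio. The interference band in the usage section is a made-up number purely for illustration; it is not a measurement from this story, and a spectrum analyzer is the only honest way to find out what a given microwave is doing.

```python
def channel_center_mhz(channel):
    """Center frequency in MHz of a 2.4 GHz Wi-Fi channel (1-13)."""
    if not 1 <= channel <= 13:
        raise ValueError("this sketch only covers channels 1-13")
    return 2407 + 5 * channel


def channel_range_mhz(channel, width_mhz=22):
    """(low, high) edges in MHz, assuming a 22 MHz wide channel."""
    center = channel_center_mhz(channel)
    half = width_mhz / 2
    return (center - half, center + half)


def channels_overlap(a, b, width_mhz=22):
    """True if the two channels share any spectrum."""
    low_a, high_a = channel_range_mhz(a, width_mhz)
    low_b, high_b = channel_range_mhz(b, width_mhz)
    return low_a < high_b and low_b < high_a


def channels_hit_by(band_low_mhz, band_high_mhz, width_mhz=22):
    """List the channels whose spectrum intersects an interference band."""
    hit = []
    for channel in range(1, 14):
        low, high = channel_range_mhz(channel, width_mhz)
        if low < band_high_mhz and band_low_mhz < high:
            hit.append(channel)
    return hit


if __name__ == "__main__":
    for channel in (1, 6, 11):
        print(channel, channel_center_mhz(channel), channel_range_mhz(channel))
    print("1 and 6 overlap:", channels_overlap(1, 6))
    print("6 and 11 overlap:", channels_overlap(6, 11))
    # Hypothetical interferer occupying 2455-2470 MHz (an assumed value).
    print("channels hit:", channels_hit_by(2455, 2470))
```

Under that assumed band, channel 11 is affected while 1 and 6 are not, which lines up with the shape of the fix above. A different interferer would give a different answer, so survey first and pick channels second.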

Example two: “the infamous 1:15pm Network-Wide slowdown”

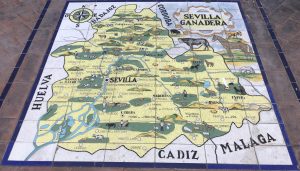

The company I was working for (Company A in this example) at this time, was essentially pushing out the previous company (Company B) after Company B had a lengthy support contract with the institution I was now supporting. So let’s just say Company B was not exactly fond of losing the contract to us nor very forthcoming with documentation. So with very little documentation turned over to us, I started managing the existing network. I was pretty green in the “networking” game as this was pretty early on in my career. I’m not going to lie to you. The Network was a larger multi-campus switched network. All of the SVIs lived on the district’s core switch for all buildings. Yea, not the best design but that’s not what this article is about lol.

So, about 2 or 3 months into supporting this network, we started to get reports from almost every campus that at about 1:15pm everything “slows down” or actually “sucks”. So, being new to networking and having a CCNA, I was clearly an expert. (I wasn’t an expert.) When I started working at this location, I had checked the spanning-tree pathing and it seemed to be correct. What I mean by that is the Core was in charge of Layer 2 (it was the root bridge) when I started. So I guess it’s not a spanning-tree problem. I started walking around each building during the 12:00pm and 1:00pm hours with my laptop, checking the monitoring system. Nothing really stood out. I went through the lower schools and worked my way back up to the High School (where the office was). As dumb luck would have it, I was in the core switch updating descriptions, as I had just brought up a replacement switch and its new fiber links in another building, when all of a sudden my SSH session froze. Like dead dead. Like, omg what’s going on, I’m a CCNA and this kind of torture is not very nice…

After about a minute (what are the default spanning-tree timers again?) I got back into the core switch and checked the memory and processor/CPU, and everything seemed to be good. My access to the internet was noticeably slower, even though at the time we were running on a 10 Mbps bundle for all of the sites. Yes, as stated, this was early on in my career. And in academia… So, for me to notice it was slow was saying something. lol. I had run the show spanning-tree root command on the replacement switch and it was pointing toward the core (the only other switch it was connected to), so I figured hey, let me run the same command on the core again just to triple check. And apparently the root bridge had moved. I ran it again. The root bridge was still not the core. How could this be? I had verified this myself. Did someone steal my credentials and start playing with spanning tree? Well… Not exactly. Let’s go down the rabbit hole…

So what causes a spanning-tree election to happen? Anyone? Ok so… Any switch/bridge topology change on a non-edge port (one without portfast enabled). Well, I had just brought up a replacement switch in that new location, but that switch was not claiming to be the root. So that one was off the hook. The spanning-tree root was now somewhere out past the high school’s distribution switch. So I hopped on there, and there it was, the “Root Port”. It was on a classroom jack. I went and chatted with the CTO in his office, we got a port-map, and I went to the classroom about 10 minutes later. The room was empty (no one was in it) as the period had changed. The computers didn’t look disturbed, and nothing was hooked up to any of the non-PC wall jacks. I made my way down the crowded hallway back to my office and jotted down the time, classroom number and port number. I logged back into the core switch to check the spanning-tree root, and all of a sudden a port off of another high school distribution switch was now the one leading to the root bridge. So this time, I had the port map handy from the CTO and went to that network closet. I traced the cable back to the jack number and cross-referenced it to, let’s say, Classroom Y. I went to Classroom Y and everything seemed to be normal. A teacher was there teaching, no other Cisco switches in sight, no gremlin grinning like a creep or pointing and laughing at me. Just a couple of PCs with N-Computing attached and the rolling laptop cart that I had built a couple of weeks prior.

I asked the teacher if there was any weirdness on the network or anyone plugging in stuff or playing with wires, but to my surprise I heard “actually the laptops are really fast, but thanks for checking”. So, because I’m me, I didn’t believe this report. I went back to my office and said “Ok, let’s say ‘the laptops are really fast’”. I checked for the spanning-tree root again and confirmed it was back in this guy’s classroom. Ok, either my manager is playing a really bad joke on me, or I’m missing something. So I grabbed the MAC of the so-called “root bridge”, ARPed it and got an IP. Like most Network Engineers, I pinged it. All 4 came back successful. I said ok, let’s look you up in the MAC Vendor Database online. “Dell Networks”. Dell Networks? What are you talking about? Then I heard the teacher in my head: “the laptops are actually really fast”… So I popped the IP into my web browser and boom. Up came the Wireless AP I had configured a couple of weeks ago. I’m like, but why is this thing participating in Spanning-Tree? Even if I’m not running this port as an edge port (portfast), after the election happens the core should just take back over. Then again, it was the same “rolling laptop” rack that we had for that wing of the high school.

So I started going through the “Advanced” settings and noticed that this Wireless Access Point was also a Wireless Bridge. And as a Wireless Bridge it happened to have 802.1D enabled. Drum-roll please… I immediately facepalmed. It was between periods at this point of the day, so I disabled the spanning-tree setting and the network hiccuped again. You know, when you start saying oh man… what did I just disable? But it came back, and my session to the core didn’t even die this time. Ok cool, progress. I left it set up this way and figured I’d check back in the next day, as I was confident that I had beaten this issue to death and fixed it.

The next day, things were much better, but some people off of the High School campus were still experiencing serious delays with file access, logins and general network access, as well as internet access. So I threw my troubleshooting hat back on, went onto the High School switch and checked the spanning-tree root; it was pointing at the core. I grinned a bit. Then I hopped by mistake onto the new replacement switch I had put in and ran the same command, and the output was puzzling: “This bridge is the root”. Wait, the new switch? I logged onto the core: “my root port is facing the new switch”. But how can this happen? I fixed this issue. What the heck?

After some investigation and reading the same sections of my Cisco Press book over and over, I started checking everyone’s spanning-tree priority. It turns out everyone’s priority was the same. This was mistake number 1. But let’s continue… With the priorities tied, the election falls to the lowest MAC address, and the replacement switch I had put in had the lowest MAC address value. Aka… I’m in charge, so send me all of your layer 2 traffic. This accounted for the slowness off-campus: we had redundant 1 gig lines between the buildings on the High School campus, but the other schools and buildings had lower bandwidth. I manually set the core switch back to being the root bridge for all VLANs by giving it a priority value of 0. After another re-convergence, the issue(s) were resolved.
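
The election logic behind that surprise can be sketched in a few lines of Python. The rule is that the lowest bridge ID wins, and the bridge ID compares priority first and MAC address second. The switch names, priorities and MAC addresses below are invented for illustration (they are not the real values from this network), and the sketch ignores per-VLAN details.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bridge:
    name: str       # hypothetical label, for illustration only
    priority: int   # configured spanning-tree priority
    mac: str        # base MAC address, e.g. "00:1e:aa:00:00:01"

    def bridge_id(self):
        """Return (priority, MAC as integer); the lowest tuple wins."""
        digits = self.mac.replace(":", "").replace("-", "").replace(".", "")
        return (self.priority, int(digits, 16))


def elect_root(bridges):
    """Pick the root bridge: lowest priority, ties broken by lowest MAC."""
    return min(bridges, key=lambda bridge: bridge.bridge_id())


if __name__ == "__main__":
    # Everyone at the same priority, so the lowest MAC wins.
    core = Bridge("core", 32768, "00:1e:aa:00:00:01")
    hs_dist = Bridge("hs-dist", 32768, "00:1c:bb:00:00:02")
    replacement = Bridge("replacement", 32768, "00:0a:cc:00:00:03")
    print(elect_root([core, hs_dist, replacement]).name)

    # Pinning the core at priority 0 makes the MAC tie-break irrelevant.
    fixed_core = Bridge("core", 0, core.mac)
    print(elect_root([fixed_core, hs_dist, replacement]).name)
```

With tied priorities the root is effectively decided by whichever box happens to have the lowest MAC, which is not much of a design.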

So, don’t let layer 2 get-cha down! Always ALWAYS Always!!!! Verify what you “already know”. Which bridge is root, and why? Is this switch/bridge supposed to be named Charles (in charge/root)? Try to put some spanning-tree protection in. If you’re not comfortable with or an expert in layer 2, start by giving the core switch a low priority value (eg 0 or 4096), and if you wanna be really sure, set your remote switches, which should never be elected as the root, to the highest value that the switch supports… (eg 61440). Also, make sure you are not running spanning-tree on host-facing ports or on any “other” devices (eg WAPs, IRB routers, old firewalls, VM hosts, vSwitches, etc). Prune that tree kids… Prune that tree.
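
If you keep your intended priorities in a spreadsheet or a text file, a quick sanity check like the Python sketch below can catch the “everyone is tied” mistake before the network does. It assumes priorities are set in steps of 4096 from 0 to 61440 (the values mentioned above fit that pattern); the switch names and the `check_priority_plan` helper are hypothetical, and this only checks a plan on paper, not what the switches are actually running.

```python
# Priorities are assumed to be multiples of 4096 between 0 and 61440.
VALID_PRIORITIES = tuple(range(0, 61441, 4096))


def check_priority_plan(plan, intended_root):
    """Return a list of problems found in a {switch_name: priority} plan."""
    problems = []
    for name, priority in plan.items():
        if priority not in VALID_PRIORITIES:
            problems.append(
                f"{name}: {priority} is not a multiple of 4096 between 0 and 61440"
            )
    if intended_root not in plan:
        problems.append(f"{intended_root}: missing from the plan")
        return problems
    root_priority = plan[intended_root]
    for name, priority in plan.items():
        # A tie is a problem too, because the MAC address would then decide.
        if name != intended_root and priority <= root_priority:
            problems.append(
                f"{name}: priority {priority} does not lose to "
                f"{intended_root} ({root_priority})"
            )
    return problems


if __name__ == "__main__":
    good = {"core": 0, "hs-dist": 32768, "remote-school": 61440}
    tied = {"core": 32768, "hs-dist": 32768, "replacement": 32768}
    print(check_priority_plan(good, "core"))
    for problem in check_priority_plan(tied, "core"):
        print(problem)
```

An empty list means the plan at least agrees with itself. You still need to log in and confirm which bridge is really root.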

I hope this helps someone out or adds to your troubleshooting toolkit. Take it from me, I’m really very VERY good at injecting my own faults into networks. The key is to learn from your mistakes or the mistakes of others (see above article), adapt and move forward. The more troubleshooting you do and the more knowledge you gain throughout your career, the better and more comfortable you will become.

— Tony Stank Stark out —
